ALMA Common Software Architecture

COMP-70.25.00.00-002-F-DSN

Version: F

Status: Draft

2009-04-15

Prepared By: | ||

Name(s) and Signature(s) | Organization | Date |

G.Chiozzi | ESO | 2009-04-15 |

Approved By: | ||

Name and Signature | Organization | Date |

Released By: | ||

Name and Signature | Organization | Date |

Change Record

Version | Date | Affected Section(s) | Change | Reason/Initiation/Remarks |

1.0/Prep.1 | 11/20/99 | All | First revision for working group internal review [RD01] and has been used as an initial input for it. | |

1.0/Prep.2 | 01/15/00 | All | Updated after group internal review | |

1.1/Prep.1 | 05/31/00 | All | Updated after discussions with NRAO and meetings with G.Chiozzi, G.Raffi, and B.Glendenning. | |

1.1/Prep.2 | 06/10/00 | All | Document renamed ALMA Common Software [RD01] as an applicable document. | |

2.0/Prep.1 | 04/11/01 | All | Updated including all comment to issue 1.1/Prep.2 and results of Kitt Peak test and the feedback from the first experiences in the usage of the ACS 0.0 prototype. | |

2.0/Prep.2 | 09/10/01 | All | Updated taking into account document's review. | |

2 | 09/26/01 | Headers and footers | Assigned document number and released officially | |

3 | 11/15/02 | All | Document updated for ALMA Internal Design Review | |

3.1 | 02/14/03 | All | Document updated after ALMA Internal Design Review | |

A | 05/31/04 | All | Applied new ALMA Template Updated for CDR-2 | |

B | 05/16/05 | All | Updated for CDR-3 | |

C | 07/29/05 | All | Updated after CDR-3 | |

D-1.23 | 04/28/06 | All | Updated for CDR-4 | |

E-1.29 | 04/30/07 | All | Updated for CDR-5 | |

F-1.35 | 06/17/08 | All | Updated for CDR-6 | |

?? | 04/16/09 | All | Converted to OpenOffice, fixing various issues with graphics and header numbers | |

| ?? | 02/02/21 | All | Converted to confluence page |

Introduction

Scope

This document describes the architecture for the ALMA Common Software (ACS), taking as applicable the requirements specified in the ALMA Common Software Technical Requirements document

[RD01]

and the ALMA Software Architecture

[RD33]

. This document provides a complete picture of the desired ACS functionality for the entire development phase, but individual concepts and features will be developed incrementally over a number of releases, according to the ALMA Common Software Development Plan

[RD32]

. For each release, a detailed plan is developed, identifying the components to be added or revised. Development priorities will be discussed with the community of users during the planning phase of each release.

This issue of the Architecture takes into account the development of the ALMA Test Interferometer Control Software, the work done by the ALMA High Level Analysis team and the requests to ACS from higher level sub-systems as appearing in their respective documents and at the ACS planning meetings. In particular contains extensions that satisfy the needs of data flow software, pipeline, offline or proposal preparation. With version A of this document, it has been decided to use ACS also as the underlying framework for the offline data reduction package (AIPS++). This requirement widens the scope of ACS and introducing new requirements that cover in particular the following areas:

- Portability

The offline data reduction is supposed to be able to run on more platforms than the ones supported now by ACS for internal ALMA development and deployment. For example MacOS-X will have probably to be supported as well as a wider palette of Linux distributions.

- Start-up time

The offline data reduction package will be used as a stand alone package and reduction executable will need a short startup time compared to applications running all the time, where the startup time is therefore not relevant

- Modular installation

It will have to be possible to install on a target machine only the subset of ACS relevant for offline data reduction usage

- Static linking of components

It will have to be possible to execute specific data reduction pipelines as a single executable and not load dynamically components.

This version of the ACS Architecture addresses many of these issues.

This document describes also some features that have not been implemented for ALMA until now and that possibly will not be implemented. It has been decided not to remove their description from the document for completeness and to make clear what is foreseen if any of these extensions needs to be implemented. These features are clearly identified in the text by the "Not implemented yet" or "Implementation not foreseen for ALMA" strings and by the usage of different fonts.

Overview

ACS is located in between the ALMA application software and other basic commercial or shared software on top of the operating systems and provides a generalized common interface between applications and the hardware in order to facilitate the implementation and the integration in the system of new hardware and software components.

ACS provides basic software services common to the various applications (like antenna control, correlator software, data pipelining)

[RD01 - 3.1.1. Scope]

and consists of software developed specifically for ACS and as well of OS builds and commercial device drivers. All code specifically developed for ACS is under the GNU Lesser General Public License (LGPL)

[RD31]

. Commercial and off the shelf packages are subject to their specific license agreement.

ACS is designed to offer a clear path for the implementation of applications, with the goal of obtaining implicit conformity to design standards and maintainable software

[RD01 - 3.1.2. Design]

. The use of ACS software is mandatory in all applications, except when the requested functionality is not provided by ACS

[RD01 - 3.1.3. Use]

. Motivated exceptions (for example based on reuse considerations) have to be discussed and approved on a case by case basis.

The main users of ACS will be the developers of ALMA applications. The generic tools and GUIs provided by ACS to access logs, Configuration Database, active objects and other components of the system will be also used by operators and maintenance staff to perform routine maintenance operations

[RD01 - 3.2.1. Users]

.

This document identifies the main packages that will be part of ACS and their high level interrelations. For each package, a section in this document respectively discusses the requirements to clarify them and presents an architectural concept.

Requirements are traced back to the ALMA Common Software Technical Requirements document

[RD01]

whenever they are referenced. An ACS Requirements Compliance Table is maintained in the ACS Software Development Plan

[RD32]

.

The concept illustrated here is based on the use of CORBA and takes into account knowledge of various control software projects based on CORBA in the astronomical and High Energy Physics communities, like SOFIA

[RD10]

, GTC-Spain

[RD11]

, ESRFGrenoble

[RD03]

, ANKA-Kalsruhe

[RD04]

etc. A lot of experience has been accumulated in the past years of ACS development, in particular for what concerns the application of these concepts to high level applications and in particular pipeline, offline data reduction and observation preparation. A lot of discussions with the AIPS++ team have helped shaping ACS based on the requirements in these application domains. It has been an initial and explicit decision of the ALMA project to use CORBA technology and at the same time to share software rather than to re-invent it. It is up to documents like this to provide elements to confirm the initial choice of CORBA as adequate.

The reasons for using CORBA are in short: Object Orientation, support for distributed systems, platform independence, it is a communication standard, it provides a variety of services.

Reference Architecture

A reference layout for the system is provided by the ALMA Software

Architecture

[RD33]

, as required by

[RD01 - 2.3. Reference Architecture]

. The Architecture of the Test Interferometer is described in the TICS Design Concept document

[RD26]

.

For the purposes of this document a distributed architecture based on computers at the individual antennas and a number of central computers, connected by an high speed backbone

[RD01 - 10.4.3. LAN]

[RD01 - 10.4.4. Backbone]

[RD01 - 10.5.11. LAN]

, is assumed

[RD02]

.

At both the antenna and the central control building there will be not only Ethernet LAN connectivity but also a Field-bus

[RD01 - 10.4.5 Field-bus]

(the AMB)

[RD01 - 10.5.12. Field-bus]

connected to various intelligent devices. The fact that the Antenna controller and all or part of these Devices is on Field-bus or LANs shall not make any difference in terms of the architecture proposed here.

Pipeline, offline data reduction and other high level applications are also assumed to be distributed over many hosts, with the need or deploying CPU intensive applications dynamically based on the available resources.

Reference Documents

The reference documents contain background information required to fully understand the structure of this document, the terminology used, the software environment in which ALMA shall be integrated and the interface characteristics to the external systems.

The following documents are referenced in this document.

[RD01] ALMA Common Software Technical Requirements, COMP-

70.25.00.00-003-A-SPE, G.Raffi, B.Glendenning, J.Schwarz (<span style="color: #0000ff"><a href="http://www.eso.org/" class="external-link" rel="nofollow">http://www.eso.org/+</a></span>![]()

<span style="color: #0000ff"><span style="text-decoration: underline; ">~almamgr/AlmaAcs/MilestoneReleases/Phase1/ACSTechReqs/Issue1.0/2000-06</span></span>![]() <span style="color: #0000ff"><span style="text-decoration: underline; ">05.pdf</span></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">05.pdf</span></span>![]() )

)![]()

[RD02] ALMA Construction Project Book, Version 5.00, 2001-08-01 (![]() <span style="color: #0000ff"><a href="http://www.mma.nrao.edu/projectbk/construction/" class="external-link" rel="nofollow">http://www.mma.nrao.edu/projectbk/construction/+</a></span>

<span style="color: #0000ff"><a href="http://www.mma.nrao.edu/projectbk/construction/" class="external-link" rel="nofollow">http://www.mma.nrao.edu/projectbk/construction/+</a></span>![]() )

)![]()

[RD03] TANGO - an object oriented control system based on CORBA

J.M.Chaize et al., ICALEPCS'99 Conference, Trieste, IT, 1999

( <span style="color: #0000ff"><a href="http://www.elettra.trieste.it/ICALEPCS99/proceedings/papers/wa2i01.pdf" class="external-link" rel="nofollow">http://www.elettra.trieste.it/ICALEPCS99/proceedings/papers/wa2i01.pdf+</a></span>

<span style="color: #0000ff"><a href="http://www.elettra.trieste.it/ICALEPCS99/proceedings/papers/wa2i01.pdf" class="external-link" rel="nofollow">http://www.elettra.trieste.it/ICALEPCS99/proceedings/papers/wa2i01.pdf+</a></span> )

)

[RD04] Implementing Distributed Controlled Objects with CORBA - M.Plesko, PCs and Particle Accelerator Control Workshop, DESY, Hamburg, 1996

(See <span style="color: #0000ff"><a href="http://kgb.ijs.si/KGB/accomplishments_articles.php" class="external-link" rel="nofollow">http://kgb.ijs.si/KGB/accomplishments_articles.php+</a></span> [ |http://kgb.ijs.si/KGB/accomplishments_articles.php]for this and other related papers).

[ |http://kgb.ijs.si/KGB/accomplishments_articles.php]for this and other related papers).

[RD05] SOSH Conventions for Control - F.DiMajoC.Watson, Software Sharing

(SOSH) for Accelerators & Physics Detectors (<span style="color: #0000ff"><a href="http://www.jlab.org/sosh/" class="external-link" rel="nofollow">http://www.jlab.org/sosh/+</a></span> )

)

[RD06] Java Home Page - (<span style="color: #0000ff"><a href="http://java.sun.com/" class="external-link" rel="nofollow">http://java.sun.com/+</a></span> )

)

[RD07] Real-time CORBA with TAO (the ACE ORB) -

( <span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/TAO.html" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/TAO.html+</a></span>

<span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/TAO.html" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/TAO.html+</a></span> )

)

[RD08] ObjectStore home page - (<span style="color: #0000ff"><a href="http://www.odi.com/objectstore/" class="external-link" rel="nofollow">http://www.odi.com/objectstore/+</a></span> )

)

[RD09] MySQL home page - (<span style="color: #0000ff"><a href="http://www.mysql.com" class="external-link" rel="nofollow">http://www.mysql.com+</a></span> )

)

[RD10] SOFIA home page - (<span style="color: #0000ff"><a href="http://sofia.arc.nasa.gov/" class="external-link" rel="nofollow">http://sofia.arc.nasa.gov/+</a></span> )

)

[RD11] GTC home page - (<span style="color: #0000ff"><a href="http://www.gtc.iac.es/" class="external-link" rel="nofollow">http://www.gtc.iac.es/+</a></span> )

)

[RD12] ALMA Monitor and Control Bus, Interface

Specification, ALMAComputing Memo #7, M.Brooks, L.D'Addario,Rev.B 200102-05

[RD13] National Instruments LabVIEW- (<span style="color: #0000ff"><a href="http://www.ni.com/labview/" class="external-link" rel="nofollow">http://www.ni.com/labview/+</a></span> )

)

[RD14] CORBA Telecom Log Service -

( <span style="color: #0000ff"><a href="http://www.omg.org/technology/documents/formal/telecom_log_service.htm" class="external-link" rel="nofollow">http://www.omg.org/technology/documents/formal/telecom_log_service.htm+</a></span>

<span style="color: #0000ff"><a href="http://www.omg.org/technology/documents/formal/telecom_log_service.htm" class="external-link" rel="nofollow">http://www.omg.org/technology/documents/formal/telecom_log_service.htm+</a></span> )

)

[RD15] omniORB Home Page - (<span style="color: #0000ff"><a href="http://www.uk.research.att.com/omniORB/" class="external-link" rel="nofollow">http://www.uk.research.att.com/omniORB/+</a></span> )

)

[RD16]OpenOrb Home Page - (<span style="color: #0000ff"><a href="http://openorb.sourceforge.net/" class="external-link" rel="nofollow">http://openorb.sourceforge.net/+</a></span> )

)

[RD17]ALMA SE Practices - Software Development Process Methodology

and Tools, G.Chiozzi, R.Karban, P.Sivera -

( <span style="color: #0000ff"><a href="http://www.mma.nrao.edu/development/computing/docs/joint/draft/SE" class="external-link" rel="nofollow">http://www.mma.nrao.edu/development/computing/docs/joint/draft/SE+</a></span>

<span style="color: #0000ff"><a href="http://www.mma.nrao.edu/development/computing/docs/joint/draft/SE" class="external-link" rel="nofollow">http://www.mma.nrao.edu/development/computing/docs/joint/draft/SE+</a></span> <span style="color: #0000ff"><span style="text-decoration: underline; ">SwDev.pdf</span></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">SwDev.pdf</span></span> )

)

[RD18] eXtensible Markup Language Home Page -

( <span style="color: #0000ff"><a href="http://www.w3.org/XML/" class="external-link" rel="nofollow">http://www.w3.org/XML/+</a></span>

<span style="color: #0000ff"><a href="http://www.w3.org/XML/" class="external-link" rel="nofollow">http://www.w3.org/XML/+</a></span> )

)

[RD19] CERN Laser project home page (<span style="color: #0000ff"><a href="http://proj-laser.web.cern.ch/proj" class="external-link" rel="nofollow">http://proj-laser.web.cern.ch/proj+</a></span> <span style="color: #0000ff"><span style="text-decoration: underline; ">laser/</span></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">laser/</span></span> )

)

[RD20] IBM DB2 Home Page - (<span style="color: #0000ff"><a href="http://www-4.ibm.com/software/data/db2/" class="external-link" rel="nofollow">http://www-4.ibm.com/software/data/db2/+</a></span> )

)

[RD21] ALMA ACS and AMS Kitt Peak 2000 Test ,G.Chiozzi et al.

( <span style="color: #0000ff"><a href="http://www.mma.nrao.edu/development/computing/docs/joint/notes/2000-12" class="external-link" rel="nofollow">http://www.mma.nrao.edu/development/computing/docs/joint/notes/2000-12+</a></span>

<span style="color: #0000ff"><a href="http://www.mma.nrao.edu/development/computing/docs/joint/notes/2000-12" class="external-link" rel="nofollow">http://www.mma.nrao.edu/development/computing/docs/joint/notes/2000-12+</a></span> <span style="color: #0000ff"><span style="text-decoration: underline; ">KP.pdf</span></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">KP.pdf</span></span> )

)

[RD22]Design and Initial Implementation of Diagnostic and Error Reporting System of SMA, SMA Technical Memo 132, Q.Zhang.

[RD23] The Adaptive Communication Environment (ACE) home page -

( <span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/ACE.html" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/ACE.html+</a></span>

<span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/ACE.html" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/ACE.html+</a></span> )

)

[RD24] Python language home page - ( <span style="color: #0000ff"><a href="http://www.python.org/" class="external-link" rel="nofollow">http://www.python.org/+</a></span>

<span style="color: #0000ff"><a href="http://www.python.org/" class="external-link" rel="nofollow">http://www.python.org/+</a></span> )

)

[RD25] Home Page for the Official Tcl/Tk Contributed Sources Archive - ( <span style="color: #0000ff"><a href="http://www.neosoft.com/tcl/" class="external-link" rel="nofollow">http://www.neosoft.com/tcl/+</a></span>

<span style="color: #0000ff"><a href="http://www.neosoft.com/tcl/" class="external-link" rel="nofollow">http://www.neosoft.com/tcl/+</a></span> )

)

[RD26] Test Interferometer Control Software Design

Concept, B.Glendenning et al., DRAFT 2001-02-15

[RD27] Advanced CORBA Programming with C++,M.HenningS.Vinoski, Addison-Wesley, 1999

[RD28] ALMA Software Glossary, COMP-70.15.00.00-003-A-GEN

( <span style="color: #0000ff"><a href="http://www.alma.nrao.edu/development/computing/docs/joint/draft/Glossary.htm" class="external-link" rel="nofollow">http://www.alma.nrao.edu/development/computing/docs/joint/draft/Glossary.htm+</a></span>

<span style="color: #0000ff"><a href="http://www.alma.nrao.edu/development/computing/docs/joint/draft/Glossary.htm" class="external-link" rel="nofollow">http://www.alma.nrao.edu/development/computing/docs/joint/draft/Glossary.htm+</a></span>

)

[RD29] AMI/ACS Report, R. Lemke, G. Chiozzi 2001-03-20

( <span style="color: #0000ff"><a href="http://www.eso.org./projects/alma/develop/acs/examples/amitest/AmiReport.pdf" class="external-link" rel="nofollow">http://www.eso.org./projects/alma/develop/acs/examples/amitest/AmiReport.pdf+</a></span>

<span style="color: #0000ff"><a href="http://www.eso.org./projects/alma/develop/acs/examples/amitest/AmiReport.pdf" class="external-link" rel="nofollow">http://www.eso.org./projects/alma/develop/acs/examples/amitest/AmiReport.pdf+</a></span> )

)

[RD30] ALMA Memo #298, Timing and Synchronization, L. DÂ'Addario,

2000-03-09 (<span style="color: #0000ff"><a href="http://www.alma.nrao.edu/memos/html" class="external-link" rel="nofollow">http://www.alma.nrao.edu/memos/html+</a></span> <span style="color: #0000ff"><span style="text-decoration: underline; ">memos/alma298/memo298.pdf</span></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">memos/alma298/memo298.pdf</span></span> )

)

[RD31] GNU Lesser General Public License (GPL)

( <span style="color: #0000ff"><a href="http://www.gnu.org/copyleft/lesser.html" class="external-link" rel="nofollow">http://www.gnu.org/copyleft/lesser.html+</a></span>

<span style="color: #0000ff"><a href="http://www.gnu.org/copyleft/lesser.html" class="external-link" rel="nofollow">http://www.gnu.org/copyleft/lesser.html+</a></span> )

)

[RD32] ALMA Common Software Development Plan, COMP-70.25.00.00001-C-PLA (<span style="color: #0000ff"><a href="http://www.eso.org/projects/alma/develop/acs/Plan/index.html" class="external-link" rel="nofollow">http://www.eso.org/projects/alma/develop/acs/Plan/index.html+</a></span> )

)

[RD33] ALMA Software Architecture, ALMA-70.15.00.00-001-I-GEN,

J.Schwarz

( <span style="color: #0000ff"><a href="http://almaedm.tuc.nrao.edu/forums/alma/dispatch.cgi/Architecture/docProfile/10" class="external-link" rel="nofollow">http://almaedm.tuc.nrao.edu/forums/alma/dispatch.cgi/Architecture/docProfile/10+</a></span>

<span style="color: #0000ff"><a href="http://almaedm.tuc.nrao.edu/forums/alma/dispatch.cgi/Architecture/docProfile/10" class="external-link" rel="nofollow">http://almaedm.tuc.nrao.edu/forums/alma/dispatch.cgi/Architecture/docProfile/10+</a></span>

<span style="color: #0000ff"><span style="text-decoration: underline; ">0017/d20021117183329/No/ALMASoftwareArchitecture.pdf</span></span> )

)

[RD34]JacORB Home Page (<span style="color: #0000ff"><a href="http://www.jacorb.org/" class="external-link" rel="nofollow">http://www.jacorb.org/+</a></span> )

)

[RD35] Eclipse Home Page (<span style="color: #0000ff"><a href="http://www.eclipse.org/" class="external-link" rel="nofollow">http://www.eclipse.org/+</a></span> )

)

[RD36] Castor Home Page (<span style="color: #0000ff"><a href="http://castor.codehaus.org/" class="external-link" rel="nofollow">http://castor.codehaus.org/+</a></span> )

)

[RD37] OPUS Home Page (<span style="color: #0000ff"><a href="http://www.stsci.edu/software/OPUS/bb.html" class="external-link" rel="nofollow">http://www.stsci.edu/software/OPUS/bb.html+</a></span> )

)

[RD38] Rational Rose Home Page (<span style="color: #0000ff"><a href="http://www.rational.com/" class="external-link" rel="nofollow">http://www.rational.com/+</a></span> )

)

[RD39] Open ArchitectureWare project home page

( <span style="color: #0000ff"><a href="http://sourceforge.net/projects/architecturware/" class="external-link" rel="nofollow">http://sourceforge.net/projects/architecturware/+</a></span>

<span style="color: #0000ff"><a href="http://sourceforge.net/projects/architecturware/" class="external-link" rel="nofollow">http://sourceforge.net/projects/architecturware/+</a></span> )

)

[RD40] Java Web Start home page (<span style="color: #0000ff"><a href="http://java.sun.com/products/javawebstart/" class="external-link" rel="nofollow">http://java.sun.com/products/javawebstart/+</a></span> )

)

[RD41] ALMA Common Software home page

( <span style="color: #0000ff"><a href="http://www.eso.org/projects/alma/develop/acs/" class="external-link" rel="nofollow">http://www.eso.org/projects/alma/develop/acs/+</a></span>

<span style="color: #0000ff"><a href="http://www.eso.org/projects/alma/develop/acs/" class="external-link" rel="nofollow">http://www.eso.org/projects/alma/develop/acs/+</a></span> )

)

[RD42] CORBA Audio Video Streaming Service

( <span style="color: #0000ff"><a href="http://www.omg.org/technology/documents/formal/audio.htm" class="external-link" rel="nofollow">http://www.omg.org/technology/documents/formal/audio.htm+</a></span>

<span style="color: #0000ff"><a href="http://www.omg.org/technology/documents/formal/audio.htm" class="external-link" rel="nofollow">http://www.omg.org/technology/documents/formal/audio.htm+</a></span> )

)

[RD43] The design and performance of a CORBAAudio/Video Streaming

ServiceCORBA Audio Video Streaming Service , D.C.Schmidt et al.,

( <span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/PDF/av.pdf" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/PDF/av.pdf+</a></span>

<span style="color: #0000ff"><a href="http://www.cs.wustl.edu/~schmidt/PDF/av.pdf" class="external-link" rel="nofollow">http://www.cs.wustl.edu/~schmidt/PDF/av.pdf+</a></span> )

)

[RD44] ARCUS Error Handling for Business Information Systems, K.Renzel, sd&m Muenchen, 2003

( <span style="color: #0000ff"><a href="http://www.eso.org/~almamgr/AlmaAcs/OnlineDocs/ARCUSErrorHandling.pdf" class="external-link" rel="nofollow">http://www.eso.org/~almamgr/AlmaAcs/OnlineDocs/ARCUSErrorHandling.pdf+</a></span>

<span style="color: #0000ff"><a href="http://www.eso.org/~almamgr/AlmaAcs/OnlineDocs/ARCUSErrorHandling.pdf" class="external-link" rel="nofollow">http://www.eso.org/~almamgr/AlmaAcs/OnlineDocs/ARCUSErrorHandling.pdf+</a></span> )

)

[RD45] EVLA Engineering Software Requirement, B.Butler et. al. EVLA-SW004, Rev. 1.4, 2003

[RD46] EVLA Array Operations Software Requirements, J.Campbel et. al. EVLA-SW-003, Rev. 2.5, 2003

[RD47] JFreeChart (<span style="color: #0000ff"><a href="http://www.jfree.org/jfreechart/" class="external-link" rel="nofollow">http://www.jfree.org/jfreechart/+*</a></span> )

)

[RD48] MatplotlibJFreeChart

( <span style="color: #0000ff"><a href="http://matplotlib.sourceforge.net/installing.html" class="external-link" rel="nofollow">http://matplotlib.sourceforge.net/installing.html+*</a></span>

<span style="color: #0000ff"><a href="http://matplotlib.sourceforge.net/installing.html" class="external-link" rel="nofollow">http://matplotlib.sourceforge.net/installing.html+*</a></span> )

)

Glossary

An extended list of glossary definitions, abbreviations and acronyms is part of the main ALMA Software Glossary

[RD28]

, available online at the following URL: {+}http://www.mma.nrao.edu/development/computing/docs/joint/draft/Glossary.htm+.

The following list of abbreviations and acronyms is aimed to help the reader in recalling the extended meaning of the most important short expressions used in this document:ABM Antenna Bus Master ACE ADAPTIVE Communication Environment ({+}http://www.cs.wustl.edu/~schmidt/ACE.html+) ACS ALMA Common Software ACU Antenna Control Unit AIPS++ Astronomical Information Processing System ({+}http://aips2.nrao.edu/docs/aips++.html+) ALMA Atacama Large Millimeter Array ({+}http://www.eso.org/projects/alma/+) AMB ALMA Monitor and Control Bus ANKA Synchrotron Radiation Source ANKA ({+}http://www.fzk.de/anka+) API Application Programmatic Interface CAN Controller Area Network CORBA

Common Object Request Broker Architecture

COTS

Commercial Off The Shelf

CPU

Central Processing Unit

ESO

European Southern Observatory ({+}http://www.eso.org+)

FITS

Flexible Image Transport Format

GUI

Graphical User Interface

GTC

Gran Telescopio CANARIAS ({+}http://www.gtc.iac.es/+)

HW

Hardware

IDL

CORBA Interface Definition Language

IIOP

Internet Inter-ORB Protocol

ISO

International Standardization Organisation

JDBC

Java Database Connectivity

LAN

Local Area Network

LCU

Local Control Unit

M&C

Monitor and Control

N/A

Not Applicable

NRAO

National Radio Astronomy Observatory ({+}http://www.nrao.edu/+)

OMG

Object Management Group ({+}http://www.omg.org/+)

ORB

Object Request Broker

OSI

Open Systems Interconnection

OVRO

Owens Valley Radio Observatory ({+}http://www.ovro.caltech.edu/+)

RDBMS

Relational Data Base Management System

RPC

Remote Procedure Call

SLA

Subprogram Library A (Positional Astronomy Library)

SW

Software

TAO

The ACE ORB ({+}http://www.cs.wustl.edu/~schmidt/TAO.html+)

TBC

To Be Confirmed

TBD

To Be Defined

TCL

Tool Command Language ({+}http://www.scriptics.com/resource/+)

TCL

(CORBA)CORBA Trader Constraint Language

TICS

ALMA Test Interferometer Control Software

TPOINT Telescope Pointing Analysis System UML Unified Modeling Language URI Uniform Resource Identifier ({+}http://www.w3.org/Addressing/+) URL Uniform Resource Locator ({+}http://www.w3.org/Addressing/+) UTC Universal Time Coordinated VME Versa Module Eurocard VLT Very Large Telescope WS Workstation XML eXtensible Markup Language ({+}http://www.w3.org/XML/+) ACS Basic Architecture

Overview

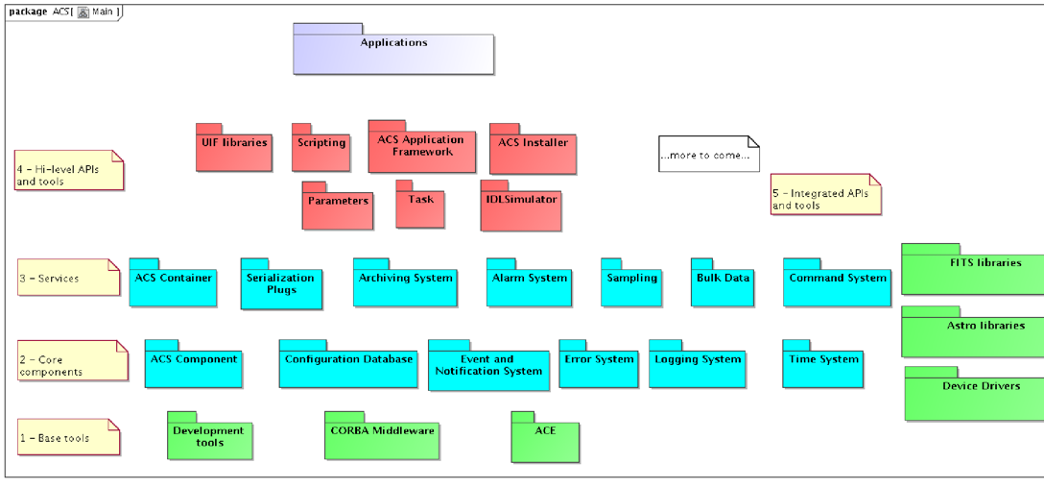

The ALMA Common Software (ACS) is located in between the ALMA application software (Applications) and other basic commercial or shared software on top of the operating systems. In particular, ACS is based on CORBA (CORBA Middleware), which provides the whole infrastructure for the exchange of messages between distributed objects. Whenever possible, ACS features will be provided using off the shelf components and ACS itself will provide the packaging and the glue between these components.

The ACS is also based on an Object Oriented architecture[RD01 - 13.1.1 Distributed Objects and commands]

The following UML Package Diagram shows the main packages in which ACS has been subdivided.

Figure 2.1: ACS Packages

Each package provides a basic set of services and tools that shall be used by all ALMA applications.

Packages have been grouped in 4 layers. Packages are allowed to use services provided by other packages on the lower layers and on the same layer, but not on higher layers.

A 5th group contains packages for software that is used by many ALMA Subsystems, but that is not used by other ACS packages. These packages are for convenience integrated and distributed together with ACS but are not integral parts of ACS.

A brief description of the layers and the packages is provided hereafter, while the next chapter will contain a detailed description of the features included in the packages.

- - Base Tools

The bottom layer contains base tools that are distributed as part of ACS to provide a uniform development and run time environment on top of the operating system for all higher layers and applications. These are essentially off-the-shelf components and ACS itself just provides packaging and installation and distribution support. This ensures that all installations of ACS (development and run-time) will have the same basic set of tools with versions kept under configuration control.

The exact set of tools and versions are described in the documentation coming with each ACS release. Being these normally big packages the ACS installation procedures will have to offer the options of installing the tools in binary format, building them from sources or using an independent installation.

Three main packages have been identified in this layer:

- Development tools

Software development tools (compilers, configuration controls tools, languages, debuggers, documentation tools).

- CORBA Middleware

Packaging of off-the-shelf CORBA implementations (ORB and services) to cover the languages and operating systems supported by ACS.

- ACE

Distribution of the Adaptive Communication Environment

[RD23]

.

- - Core components

This second layer provides essential components that are necessary for the development of any application

- ACS Component

Base interfaces and classes for Component part of the ACS Component Model. In particular C++ Distributed Objects, Properties and Characteristics are implemented in this package.

- Configuration Database

Interfaces and basic implementation for the Configuration Database from where ACS Components retrieve their initial configuration

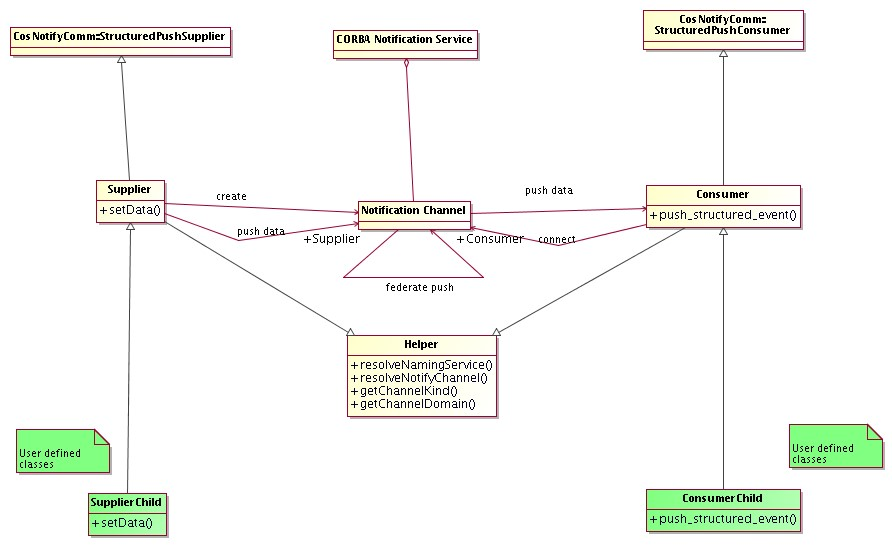

- Event and Notification System

The Event and Notification System provides a generic mechanism to asynchronously pass information between data publishers and data subscribers, in a many-to-many relation scheme.

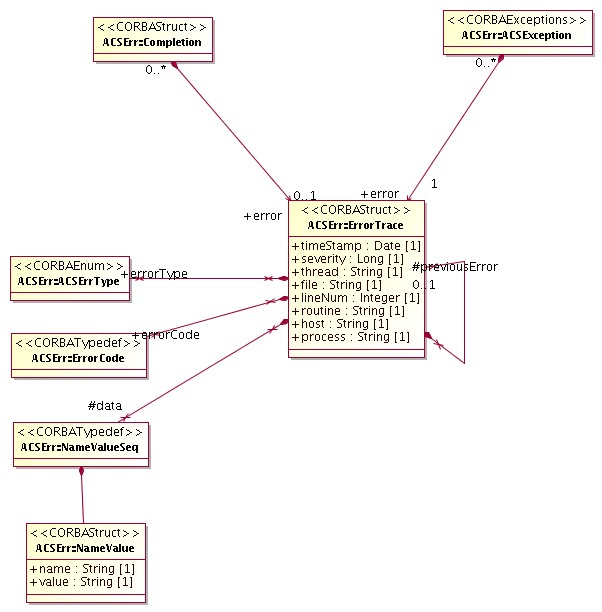

- Error System

API for handling and logging run-time errors, tools for defining error conditions, tools for browsing and analyzing run-time errors.

- Logging System

API for logging of data, actions and events. Transport of logs from the producer to the central archive. Tools for browsing logs.

- Time System

Time and synchronization services.

- - Services

The third layer implements services that are not strictly necessary for the development of prototypes and test applications or that are meant to allow optimization of the performances of the system:

- ACS Container

Design patterns, protocols and high level services for Component/Container life-cycle management.

- Serialization Plugs

This package provides a generic mechanism to serialize entity data between high level applications, typically written in Java.

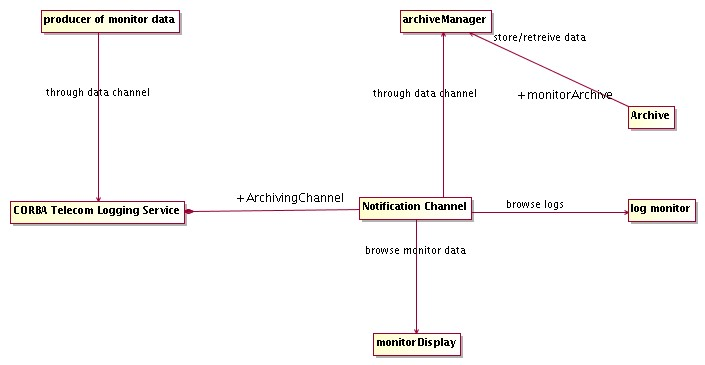

- Archiving System

API and services for archiving monitoring data and events from the run time system. Tools to browse, monitor and administer the flow of data toward the archive.

- Command System

Tools for the definition of commands, API for run-time command syntax checking, API and tools for dynamic command invocation.

- Alarm System

API and tools for configuration of hierarchical alarm conditions, API for requesting notification of alarms at the application level, tools for displaying and handling the list of active alarms.

- Sampling

Low level engine and high level tools for fast data sampling (virtual oscilloscope).

- Bulk Data

API and services for the transport of bulk science data (images or big data files) and continuous data streaming.

- - API and High-level tools

The fourth and last layer provides high level APIs and tools. More will be added in the future. The main goals for these packages is to offer a clear path for the implementation of applications, with the goal of obtaining implicit conformity to design standards and maintainable software

[RD01 - 3.1.2. Design]

.

- UIF Libraries

Development tools and widget libraries for User Interface development .

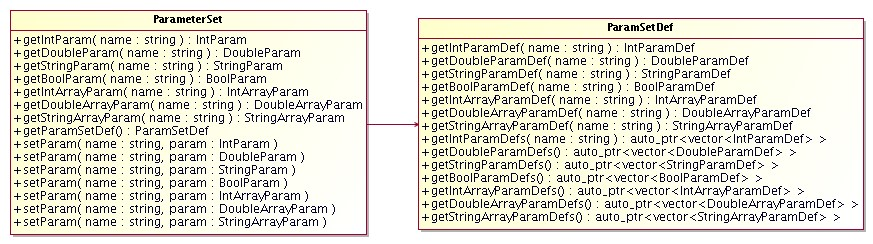

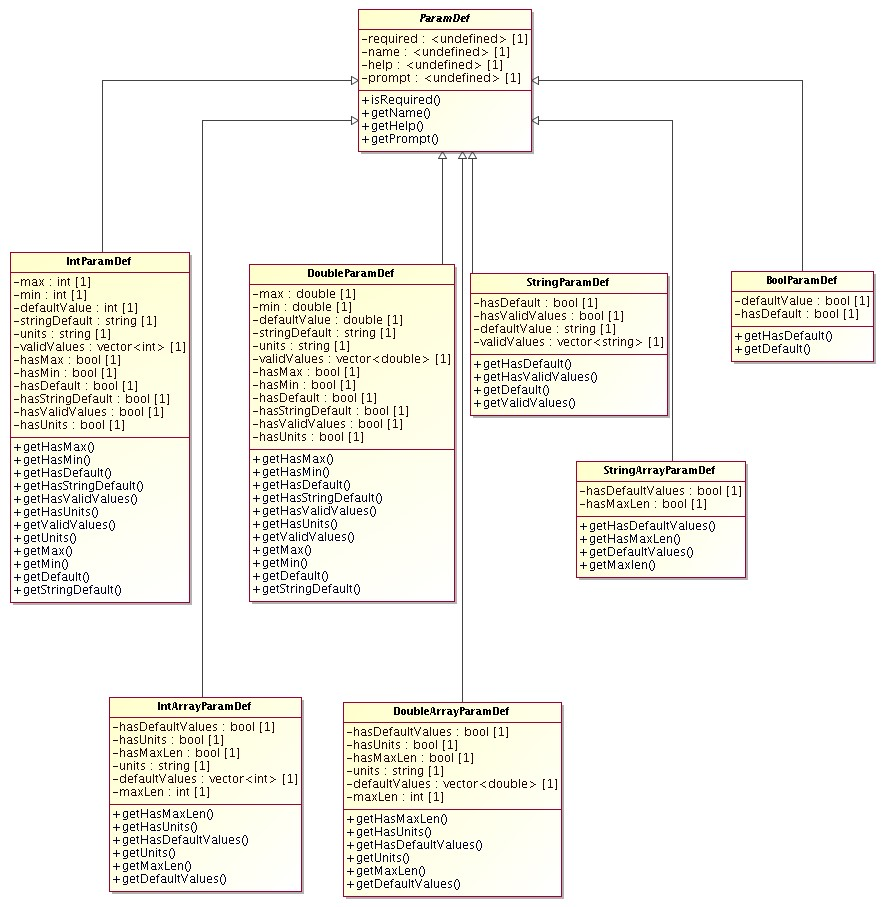

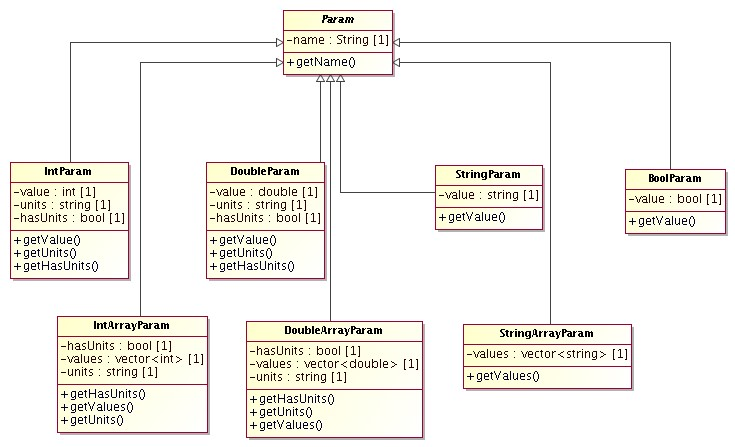

- Parameters

Support libraries and classes for "parameter sets", i.e support for definition, parsing and validation of sets of parameters, plus some additional metadata such as help information, valid ranges, default values, whether the parameters are required or optional, etc.

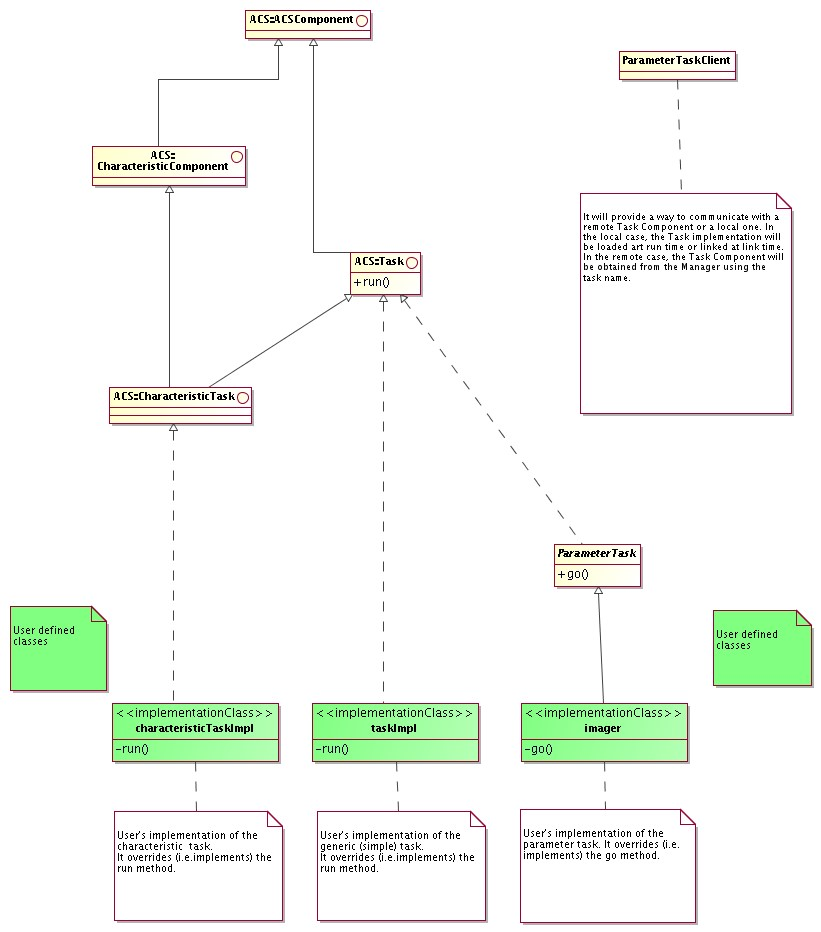

- Task

A task is a concise program which starts up, performs some processing, and then shuts down. A task may or may not require other more advanced ACS services, depending on context.

- Scripting

Scripting language and access libraries for the integration with ACS core components.

- ACS Application Framework

Implementation of design patterns and to allow the development of standard C++, Java and Python applications using ACS services.

- ACS Installer

Tolls for installing ACS with different options.

- - Integrated APIs and tools

The 5th group of packages contains software that is used by many ALMA Subsystems, but that is not used by other ACS packages. These packages are for convenience integrated and distributed together with ACS but are not integral parts of ACS. The list of packages will be extended according to the ALMA integration needs. If considered useful, some packages can be hidden on the back of an ACS abstraction layer with the purpose of facilitating the usage of the package and its integration with other ACS facilities (like error and alarm handling). In this case the package would be moved in layer 4 of the ACS architecture.

- Device Drivers

Low-level device drivers for commonly used devices

- Astronomical libraries

Libraries for astronomical calculations and data reduction.

- External libraries

Support for the handling of FITS files is just an example of other highlevel components that will be integrated and/or distributed as part of ACS.

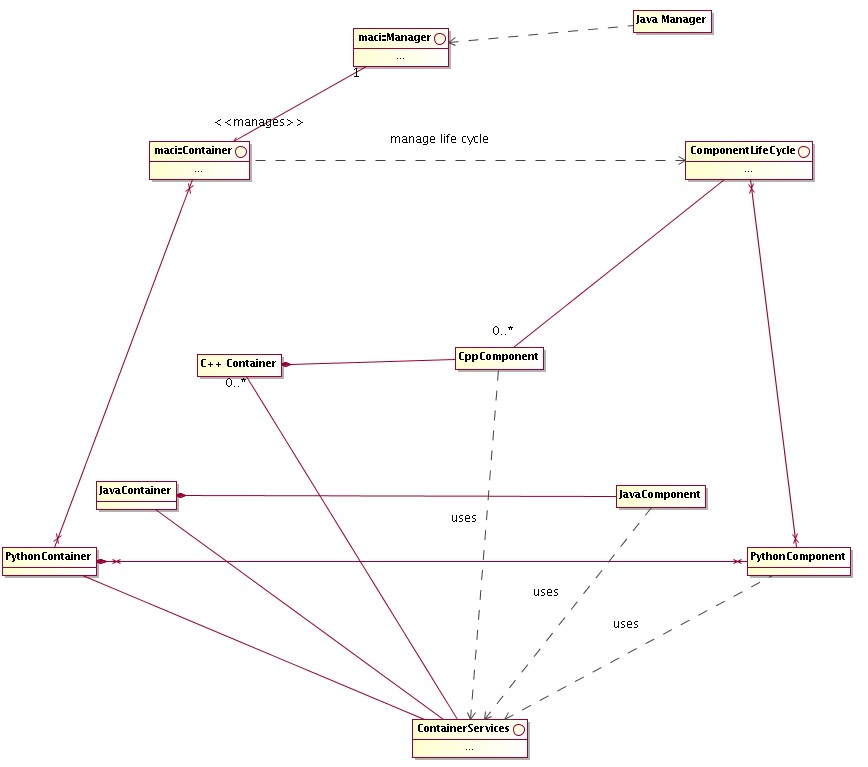

2.2Component Container model

The Technical Architecture in the ALMA Software Architecture document

[RD33]

identifies a Container-Component model for software organization and development as our primary instrument for achieving separation of functional from technical concerns.

A Component is defined in

[RD33]

as a software element that exposes its services through a published interface and explicitly declares its dependencies on other components and services, can be deployed independently and, in addition:

- "is coarse grained: In contrast to a programming language class, a component has a much larger granularity and thus usually more responsibilities. Internally, a component can be made up of classes, or, if no OO language is used, can be made up of any other suitable constructs. Component based development and OO are not technically related."

- "requires a runtime environment: A components cannot exist on its own, it requires something which provides it with some necessary services." This "something" is called a Container.

- "is remotely accessible, in order to support distributed, component based applications."

The division of responsibilities between Components and Containers enables decisions about where individual components are deployed to be deferred until runtime. If the container manages component security as well, authorization policies can be configured at run time in the same way.

A Component is required to provide:

- a Lifecycle interface, so that the Container where it resides can manage it

- a Service interface, that is the interface it exposes to clients

Architecture

A Container is required to provide:

- an implementation for all basic services used by the Components

- a ContainerServices interface used by the Components to get access to the services

ACS provides a simple implementation of the Component-Container model implemented in C++, Java and Python.

In order to decouple the Component and Container implementations, the ACS Component package contains the definition of all the interfaces needed for the implementation of Components and the default implementation of the interface which can be used as base classes for the Components themselves.

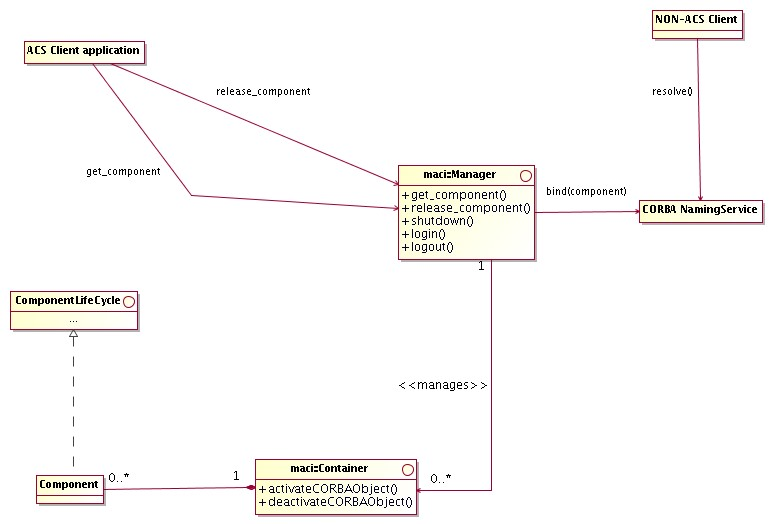

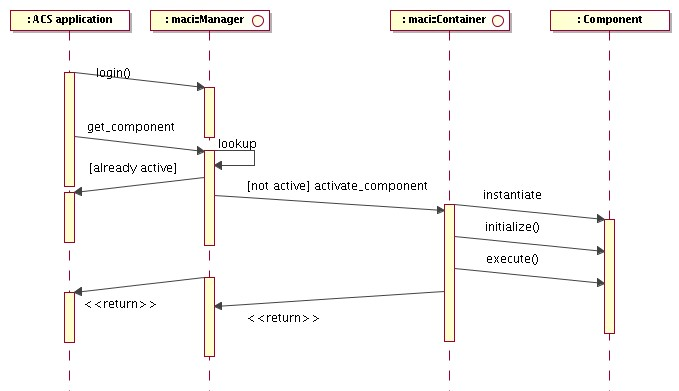

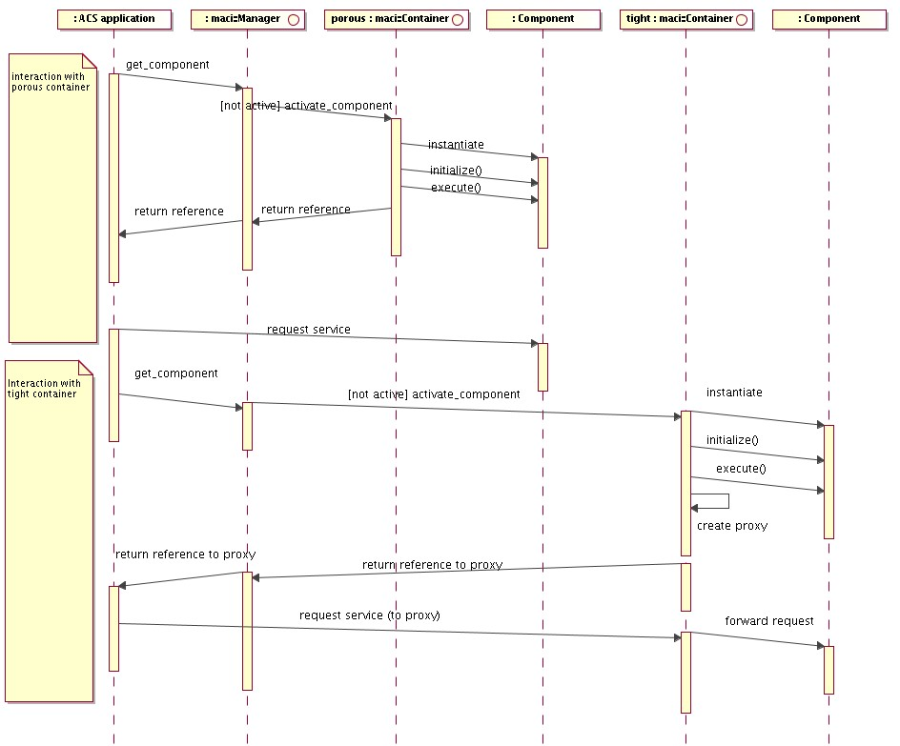

The ACS Container package contains the actual implementation for the Container model, including the high level management of Containers (Manager). The following diagram provides an overview class diagram of the ComponentContainer model. More details are given in the description of the Component and Container architectural packages.

Architecture

Figure 2.2: ACS Component-Container overview class diagram

For a more detailed discussion of rationale behind the choice of the ComponentContainer model, see the ALMA Software Architecture document

[RD33]

.

2.3Deployment

The choice of CORBA for the implementation of a Component/Container model and of all services that are part of the previously described packages makes it possible to have every software operation available in a transparent way both locally and at the Control Center in San Pedro. This applies also to all data, logs and alarms

[RD01 - 12.1.6 Location]

. The choice for the LAN and access optimization mechanisms, described in the following sections, will ensure that no significant degradation in performance will take place

[RD01 - 3.2.4. Local and central operation]

.

In principle, this same mechanism allows reliable remote access from the US and Europe, although with reduced performance. It is anyway necessary that applications are designed in order to prevent unauthorized access and undesired side effects on the performance of the control system

[RD01 - 3.2.5. Remote access]

. ACS provides the basic building blocks for the implementation of these mechanisms.

All packages in the lower ACS layers are available for developing code both for the Linux

[RD01 - 10.5.4 OS]

and the VxWorks

[RD01 - 10.5.3 RTOS]

platforms. The usage of the ACE C++ toolkit allows writing portable code that can migrate, for example, from Linux to VxWorks and vice versa according to development and run-time needs.

Real Time Linux has replaced VxWorks as the real time development platform in ALMA (limited support for VxWorks is maintained for other projects). In this configuration, only hard real time tasks will run in the real time kernel, while every other task will run in the normal Linux kernel. ACS provides "bridge modules" that allow the real time tasks to communicate with non real time tasks in a standard way. For example, a logging "bridge module" allows the generation of logs on the real time side and their further propagation on the non real time side.

Higher-level components are not usually needed on the real-time platform. In this case Java will be used to provide portability among non real-time platforms (for example Linux and Windows). This will apply in particular for user interface applications.

Some development tools can be required to run or can be more convenient to use on Windows platforms.

Via CORBA, all objects publishing an IDL interface will be available to any environment, host and programming language where a CORBA implementation is available. In particular it will be possible to write client applications for

Components in any CORBA-aware platform. ACS explicitly supports C++, Java, C and Python

[RD01 - 10.3.3. Compiled Languages]

[RD01 - 10.5.6. Scripting language].

The ACS Component/Container model is then implemented in C+, Java and Python. The three implementations are not identical, but are tailored to the specific application domain of the language. For example, the C+

implementation provides strong support for the

Component/Property/Characteristic pattern of interest for Control Applications

Architecture

and provides a more sophisticated threading model handling of interest for the Pipeline and Offline Data Reduction applications. On the other hand, the Java implementation provides Tight Containers and transparent XML serialization.

The ACS installation procedures will allow selecting the installation platform and will allow selecting between development and run time installations. For a development installation, all development tools will be installed, including compilers and debuggers, while a run-time installation will be much lighter and include only the libraries and components needed at run-time. There is also a separate installation for "pure Java" applications that will be installable and usable from any machine supporting a Java Virtual Machine. This is based on Web Start technology to make installation as simple and automatic as possible, as well as software upgrades.

Per each package, it will be specified at design time what components will be available on each platform and for run-time and development installations.

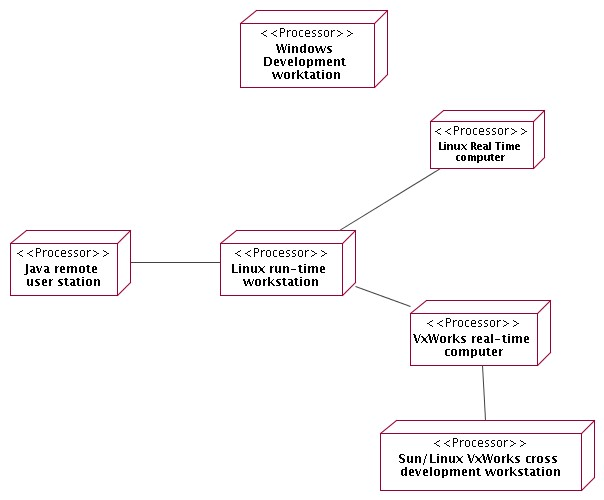

We foresee 7 different types of deployment nodes (connections in the diagram show the foreseen communication paths among node types, for example a Remote User Station is allowed to communicate only with a Linux run-time workstation):

Figure 2.3: ACS Deployment

- Linux Development workstation

Linux is the main development and run-time platform.

This installation includes all ACS components necessary for development and at run-time.

- Windows Development workstation

Windows is a development and run-time platform for Java based applications and user interfaces. In some cases and in particular for very high level tools that have to be installed on the premises of astronomers, it is explicitly required that they be supported on various platforms. Developing not only from a Linux but also from a Windows environment would help in ensuring better compliance with Java Virtual Machines on various platforms.

An ACS Windows Development installation will allow installing Java development tools and Java libraries for development, including CORBA ORB and services.

We assume that an ACS developer will have a Windows desktop used for

Java development and as a terminal to connect to a Linux Development Workstation. This does not exclude users having access only to Linux workstations but limit their capability and/or comfort in developing Java applications.

- Linux Real Time Computer

Linux real time computers can be normal PCs or VME racks with Intel CPU running a real time Linux kernel.

- Linux run-time workstation

When disk space is an issue for small run-time only boxes, it will be possible to deploy on ACS runtime libraries and components. The main limitation of this configuration is that it offers very poor debugging capabilities in case of problems, with respect to a full development installation

- Remote user station

A very light ACS installation will be provided for users that need only to run Java/Python applications to remotely interact with other ALMA subsystems. This will allow installing only Java/Python ACS run time libraries and applications (including CORBA ORB and services) on Linux, Windows or other operating systems supporting a Java Virtual Machine (officially supported and tested Java Virtual Machines will be defined at each ACS and specific application release) or a Python interpreter.

- VxWorks real-time computer (not used in ALMA)

VxWorks computers are used only as run time platforms, as a crossdevelopment computer is necessary to develop code. ACS will deploy on VxWorks only run time libraries, which are downloaded from a file server at boot time or when needed.

VxWorks computers have been phased out from ALMA and replaced by computers running a real-time Linux kernel.

- Linux VxWorks cross-development workstation

The VxWorks cross development environment is installed on a Linux workstation. This includes all development tools and ACS components. Typically the same development workstation should be used for Linux and VxWorks development. We are constrained by the fact that WindRiver does not support Linux as a VxWorks cross-development environment for the version of VxWorks we support in ACS. We have now a cross development environment for Linux, but we cannot run Wind River debugging and development tools there. Therefore we use normally Linux for cross-development, but it might be convenient to keep available Sun workstations when we need to run Wind River development tools. Sun has been phased out from ALMA together with VxWorks.

3ACS Packages

This chapter contains one section per each ACS package, describing the features provided and its high level architecture.

The packages are described, looking at the package diagram in Chapter 2, starting from the lower layer and from left to right.

For some packages, just a brief description is given. In particular this applies to packages that are just the integration of off the shelf components.

3.1Development tools

Packaging of tools necessary for the whole life cycle of software developed with ACS. This includes for example compilers, configuration controls tools, languages, debuggers, documentation tools and general purpose class libraries. The complete list will be defined at each release. ACS will assume a specific set of supported Operating Systems and versions.

3.2CORBA Middleware

Packaging of off-the-shelf CORBA implementations (ORB and services) to cover the languages and operating systems supported by ACS.

This includes also all CORBA Services used by ACS.

The complete list of ORBs and Services will be defined at each release. The ACS distribution contains the binary version of the ORBs and Services and allows rebuilding the binaries from sources. Whenever possible the original distribution from the vendor, in the right versions, is provided; in some case specific ACS patches are distributed together with the original distribution.

3.3ACE

Distribution of the Adaptive Communication Environment

[RD23]

. This C++ class library provides support for cross-platform portability and implementation for a wide set of design pattern specific for distributed systems.

3.4ACS Component

The requirements document

[RD01]

specifies as a basic design requirement the adoption of an Object Oriented architecture based on distributed objects

[RD01 - 13.1.1 Distributed Objects and commands]

.

The Technical Architecture in the ALMA Software Architecture document

[RD33]

identifies a Container-Component model for software organization and development as our primary instrument for achieving separation of functional from technical concerns.

This concept is the basis for the architecture and all services provided by ACS are designed around Components.

3.4.1 Every Component must implement the ComponentLifeCycle interface. This interface foresees the following basic lifecycle operations:

- initialize – called to give the component time to initialize itself, e.g.

retrieve connections, read configuration parameters, build up in-memory tables…..

- execute – called after initialize() to tell the component that it has to be ready to accept incoming functional calls any time

- cleanup – the component should release resources in an orderly manner, because shutdown is imminent

- aboutToAbort – the component will be forcibly removed due to some error condition.

3.4.2The Container passes to each Component a ContainerServices object (in C++ this occurs at construction time, while in Java at initialize() time, because of the different traditional ways of using constructors and destructors in the two languages). At that point, the Component can assume that all infrastuctural services it may need have been properly set up by the Container and are available via the ContainerServices object.

3.4.3The architecture of the system is based on CORBA. Component and Container interfaces are described in IDL, and Components and Container are implemented as CORBA objects. The impact of CORBA on component implementation classes is very low or none, varying among languages.

3.4.4For exceptional cases, a component can explicitly ask the container to create an associated CORBA object (called an "OffShoot") and then pass it around in the system.

3.4.5ORB independence and interoperability

[RD01 - 10.4.2 ORB Independence]

is ensured by basing the Distributed Object implementation on CORBA Inter-ORB

Protocol (IIOP) and Portable Object Adapter (POA) and by not allowing the use of any ORB-specific feature. Interoperability between ORBs has been demonstrated with the the Kitt Peak Test and with the previous releases of ACS, where we have changed ORBs a number of times. The selection of the final ORBs needed for ACS is not part of this Architecture document. The current baseline includes TAO

[RD07]

for C/C++, JacORB

[RD34]

for Java, omniORB

[RD15]

for Python bindings, OpenOrb

[RD16]

for some code generation tools.

3.4.6ACS provides a C+, a Java, and a Python Container Implementation. The three implementations differ in the features offered because C+, Java and Python applications have different requirements, as described in

[RD33]

. Features from one type of component can be implemented also for the other type, if the requirement arises.

3.4.7ContainerSercices and ComponentLifeCycle interfaces are NOT defined as IDL interfaces, since they are used only in the internal communication between Components and Containers and there are language specific differences.

3.4.8Basic functionality provided by ContainerServices is:

- getName()

- getComponent(name)

- findComponent(type)

- releaseComponent(name)

- getCDB()

- getThreadManager()

For more details see the implementation and detailed design documentation. In particular there are various flavours of the getComponent() interface, some described here after.

3.4.9Specific Containers can provide specialised subclasses of ContainerServices with additional features. Components aware of this additional functionality can make use of it, while other Components would ignore it transparently.

3.4.10 Normally a Container will provide each Component with a specific instance of ContainerServices that contains specific context information, but this is left to the responsibility of the Container. Therefore the life cycle of the ContainerServices objects is left to the complete control of the Container.

--------------------------------------------------

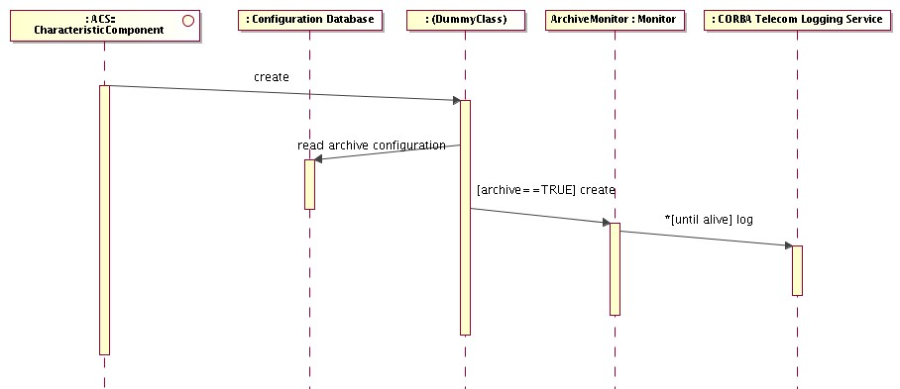

3.4.11 Characteristic Components are a subclass of Components tailored to the implementation of objects that describe collections of numerical (typically physical) quantities. They have been designed in particular to represent Control

System objects with monitor and control points or objects with state and configurable parameters. In particular Characteristic Components are described using a 3 tier naming for the logical model

[RD03]

[RD04]

[RD05]

:

- Characteristic Component

- Property

- Characteristic

3.4.11.1 Characteristic Component - Instances of classes identified at design level in the ALMA system, with which other components of the system interact, are implemented as Characteristic Components. In particular, at control system level, Characteristic Component is the base class used for the representation of any physical (a temperature sensor, a motor) or logical device in the control system. Higher level applications can use Characteristic Components to implement any Component that has configurable values representing numerical quantities.

3.4.11.2 Property - Each Characteristic Component has 0..n Properties that are monitored and controlled, for example status, position, velocity and electric current.

3.4.11.2.1 Properties can be read-only or read/write. If a read/write property cannot read its value back (for example it is associated with a write-only physical device), it caches the last written value and returns this upon read request. This implementation is mandatory and must be documented in the property documentation.

3.4.11.2.2 Properties can represent values using a limited set of basic data types:

- long, longLong and uLongLong for integers • double for floating point numbers

- string for strings.

- pattern to handle patterns of bits, typically from hardware devices

- enum for enumerations like states. This includes a boolean TRUE/FALSE enumeration.

- sequence<scalar property> of one of the previously defined scalar property types. A Sequence<scalar property> is a sequence (in CORBA IDL terms) of properties of a given scalar type, i.e. each item in the sequence is a complete property of the given scalar type. It is implemented as an IDL Sequence of the scalar property type. For example a sequence<long> allows manipulating a group of properties of type long. Each item in the list can be assigned to a long property object and manipulated (reading characteristics and value) independently from the others.

- scalarTypeSeq of one of the previously defined scalar types. A scalarTypeSeqis a property type that contains as value an array of values handled by the corresponding scalar type. For example, a longSeq is a property type with a single set of characteristics that apply to an array of integers. It is a single property and its value is an array of values. With respect to sequence<scalar property>, scalarTypeSeq is much more efficient for transporting big tables of data. (Not all types implemented for

ALMA)

- complex for handling complex numbers (Implementation not foreseen for ALMA)

- structures built with properties of the other basic types. Since structures introduce a significant increase of complexity in the handling libraries, they will be implemented last and only if a clear need arises.(Implementation not foreseen for ALMA)

3.4.11.2.3 The selection of a limited set of type is motivated by the need of avoiding implementing the same code for many different types and conversion problems between similar types (like short, int and long). Also, nowadays saving a couple of bytes using a short instead of a long usually introduces performance problems (CPUs now always works with longs and every operation on a short requires a conversion to long)

3.4.11.3 Characteristic - Static data associated with a Characteristic Component or with a Property, including meta-data such as name, description, version and dimensions, and other data such as units, range or resolution. Each Characteristic Component or each Property has 0..n Characteristics.

An initial list of Characteristics for Characteristic Components and Properties has been agreed and more details are given in the design documentation:

- Characteristic Components and Properties: Name, Description, Version and URI of extended documentation, where the last is optional and would point to documentation generated automatically from the source code.

- Read-only and Read/Write Properties: default values, range, units, format, resolution

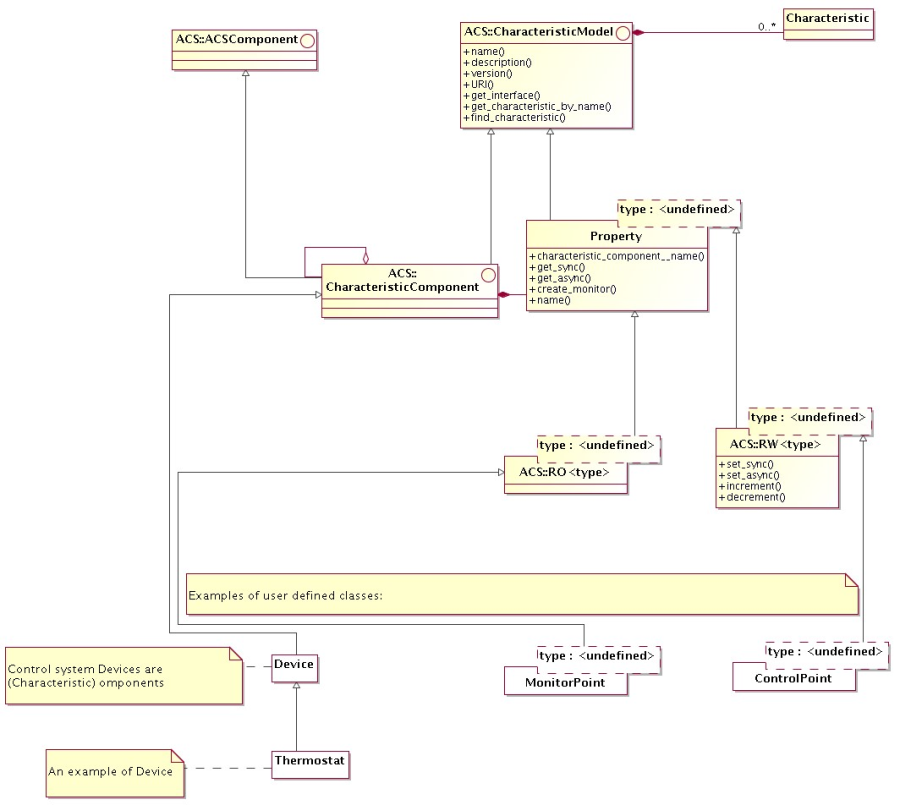

3.4.11.4 The following diagram shows an architectural class diagram for the Characteristic Component - Property - Characteristic pattern

Figure 3.1: Characteristic Component - Property - Characteristic class diagram

- The diagram shows the classes that have an IDL public interface and is not concerned with actual implementation of the servants that realize these interfaces.

- A CharacteristicModel base interface class groups methods and attributes common to both Property and Characteristic Component. In particular, both have a common set of Characteristics and provide the related access methods.

- A Characteristic Component can reference other Characteristic Components, to build a hierarchical structure

- Properties are always contained into a Characteristic Component. This means that a Characteristic Component can contain 0 or many Property instances, while a property is always contained in one and only one Characteristic Component. The Property class provides a method to retrieve the reference to the Characteristic Component that contains it.

- From the base Property class, subclasses for each read only and read/write types are derived. This is represented in the diagram by the ROProperty<type> and RWProperty<type> parametrized classes. From an architectural point of view, RWProperty<type> classes are subclasses of the corresponding ROProperty<type>.

- The lower part of the diagram (white class boxes) shows how applications will inherit from the base classes provided by ACS. The example shows classes used for the implementation of the control system.

- This diagram is sufficient and correct at architecture level. At design level, we have introduced some intermediate class levels to improve the modularity of the code. These intermediate classes are in any case hidden to the users, making the actual structure between the Property class and the implementation of RO and RW properties an implementation detail.

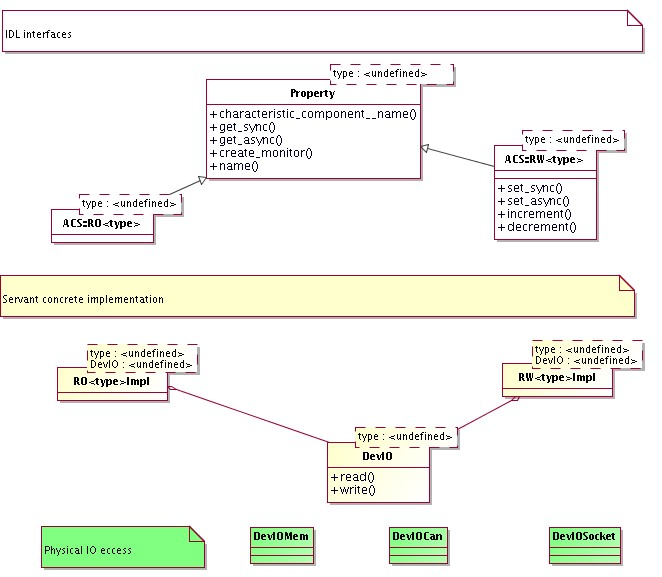

3.4.11.5 At the servant's implementation level, the classes implementing the Property interfaces are responsible for the actual interfacing with the hardware or, more in general, to retrieve/calculate the value for the numerical entities. In order to decouple as much as possible the implementation of Property classes and the access to different kinds of data sources, we have parametrized the default Property implementation provided by ACS with a DevIO parameter, as shown in the following class diagram. A DevIO implementation is responsible only for reading/writing the Property's value from a specific device (memory location, CAN bus, Socket connection, serial port, database….), therefore in most cases access to a new kind of device can be implemented just by implementing a new DevIO class. In more complex cases or for performance optimization it may be necessary to re-implement the entire Property interface.

Figure 3.2: Property Servant Implementation class diagram

3.4.11.6 Another common strategy to handle the case where a data source produces simultaneously the values for many Properties consist in mapping the Properties onto memory-based DevIOs and let a parallel execution thread update all memory locations from the data collected with one single access to the data source.

3.4.11.7 The Characteristic Components - Properties - Characteristics 3 tier logical model is very well established in the community of Control Systems for Physics Experiments

[RD03]

[RD04]

[RD05]

, where the name Device is used to identify what we call here Characteristic Component. We prefer to adopt the more generic name, as specified in

[RD01 - 13.1.1 Characteristic Components and Commands]

, because the usage of the ACS is not limited to the realm of the Control System, as in the case of the mentioned references. It provides instead generic services for the development of the whole ALMA software. Proper Device classes are implemented by the Control System development team based on Characteristic Components.

3.4.12 The Characteristic Component model is based on CORBA:

3.4.12.1 A Characteristic Component is a CORBA object

3.4.12.2 A Property is a CORBA object. A class hierarchy with Property class as root implements the basic read-only and read/write versions for the predefined types. This hierarchy provides standard IDL interfaces that shall be used by all clients to access Properties. On the implementation (servant) side, specific subclasses will provide polymorphic access to specific implementations like 'logical', 'simulated', 'CAN', 'RS232', 'Digital IO' and so on.

3.4.12.3 Public interfaces to Characteristic Components and Properties are defined as

CORBA IDL.

3.4.12.4 Characteristics of Characteristic Components and Properties can be accessed through access methods (as shown in figure) and through a generic value = get_characteristic_by_name(name) type of interface at run time. The interface of properties is defined by their IDL and the IDL is the same independently from the implementation (logical, CAN...). But specific implementations will have also specific characteristics. For example a CANLong property has a CANID characteristic. This means that from the property's IDL there is no way to retrieve the CANID using CORBA calls. We provide then a generic interface that can be used to retrieve any characteristic just querying by name. This allows accessing specific Characteristics, like the CAN ID for properties related to CAN monitor points that are not defined in the generic property IDL but are instead implementation specific.

3.4.13 The configuration parameters for all Characteristic Components, i.e. the initial

values for Properties control values and all Characteristics for Properties, are persistently stored in the Configuration Database

[RD01 - 4.2.1. Configuration Database]

. See the section on Configuration Database architecture.

3.4.14 Characteristic Components may have a state. Specific Characteristic Components can have additional sub-states.

[RD01 - 13.1.2 Standard Methods]

[RD01 - 14.1.13 States]

.

3.4.15 Note: A standard state machine and standard state transition commands could be defined. (Implementation not foreseen for ALMA).

3.4.16 JavaBeans wrap CORBA objects on the client side. Standard Rapid Application Development (RAD) tools like Eclipse

[RD35]

are used to handle them. Given the IDL interface of a Characteristic Component, a code generator automatically produces the corresponding JavaBean. In this way the developer has libraries that provide him direct support for ACS concepts like Characteristic Component/Property/Characteristic, Monitors, and Event and Notification System.

3.4.17 ACS also provides a generic IDL simulator (see section IDL Simulator) to simulate an entire Component.

3.4.18 If an application wants to provide a more sophisticated level of simulation (for

example simulating interrelations between the values of properties), a specific

simulated device should be implemented in parallel to the real device. Switching from the real to the simulated device is handled in the configuration of the Manager (see Management and Access Control section), telling it to start a different implementation of the same device's CORBA interface.

3.4.19

Note: As an extension, ACS could provide support for simulation[RD01 - 3.3.4.

Simulation] at Property level. All properties that access hardware can be switched in simulation by setting TRUE a simulation characteristic in the configuration database. After this, they behave like "logical properties". This provides basic simulation capabilities. (Implementation not foreseen for ALMA).

3.4.20 Direct Value Retrieval

3.4.20.1 The Property classes provide get() and, in case of writeable Properties, set() methods that can be used to directly access the value of the property from clients

[RD01 - 4.1.1 Direct value retrieval]

. Both synchronous and asynchronous get() and set() methods are provided.

3.4.20.2 Value setting is done using set() property methods. These methods can be called by applications or by specifically designed GUIs. CORBA Dynamic Invocation Interface allows to write generic applications and GUIs (like the Object

Explorer) that are capable of resolving dynamically at run time the structure of Characteristic Components and call set() methods to set the value of Properties

[RD01 - 3.2.3. Value setting]

.

3.4.20.3 DevIO specialization is used to implement properties accessing specific hardware devices, like CAN, RS232, GPIB. CAN properties will always directly access the hardware on the CAN bus at direct value retrieval and not use cached values.

3.4.21 Value Retrieval by Event

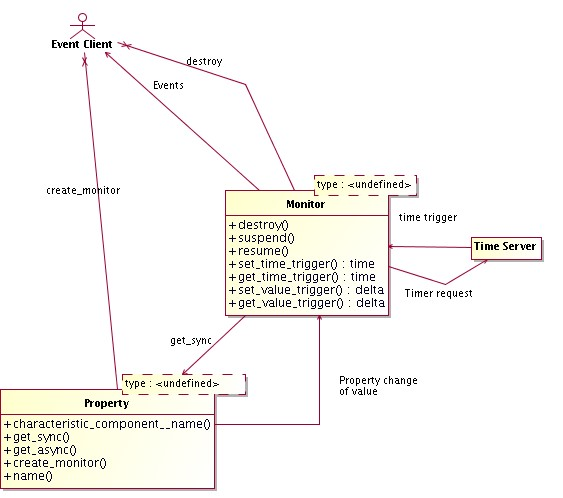

3.4.21.1 The Characteristic Component provides a method to create a monitor object for a Property, able to trigger events on Property change or periodically. A callback will be connected to the event and will be called by the monitor object when the specified event occurs

[RD01 - 13.1.4. Events]

. Triggered events are delivered directly to the registered object via the callback mechanism in a point-to-point fashion. The value retrieval by event is then very well suited for providing timely feedback to control applications.

3.4.21.2 Events can be generated on any change of value

[RD01 - 4.1.3 Rate]

.

3.4.21.3 Note: Other conditions, for example any write, value increase/decrease, value less or greater than set-point could also be included at a later stage. (Implementation not foreseen for ALMA)

3.4.21.4 Timed or periodic events can be generated as follows:

Periodic, at a specific interval rate

[RD01 - 4.1.3 Rate]

Periodic, at a specific interval rate, synchronized with an absolute array time

[RD01 - 4.1.5 Values at given time]

. This also allows periodic events aligned with the monitoring rate. For example, a 1-second rate generates events on the 1-second mark, 5-second rate on the 5-second mark and so on.

Figure 3.3: Value Retrieval by Event: ACS Monitors

3.4.21.5 All events will be time stamped with the time at which the value has been acquired (as opposed to the time of delivery of the event). Timed events will be triggered "timers" such that the requested time is the time of acquisition of the value, and not the time of delivery which depends on the network characteristics.

3.4.21.6 The monitor class provides methods to suspend, resume and destroy the monitor

itself.

3.4.21.7 Note: CAN-Properties will have to implement notification on change also for CAN

monitor points although CAN monitor points do not provide a specific support via HW or in the drivers. This can/should be done via polling. If there are clients registered on events on change, an ACS monitor is used to poll and to generate events in case of change of the value of the monitor point. The poll rate is defined by the characteristic change frequency. The polling frequency determines the time resolution of the eventon-change. (implementation not foreseen for ALMA)

3.4.21.8 Note: For performance optimization, the final implementation will not leave to the single Characteristic Component Properties the responsibility of managing timers, but a local centralized manager will take care of that, transparently to client applications. More details will be given in the ACS design. (implementation not foreseen for ALMA)

3.4.21.9 Note: A particular case is a Characteristic Component State Machine. A State Machine class is a Characteristic Component and the current state is represented by a State Property. This State Property can fire events whenever the state changes to allow external objects to monitor it. (implementation not foreseen for ALMA)

3.4.22 Supported implementation languages

The Characteristic Component / Property /characteristic pattern is implemented in C++ and in Java and Python

Note: Java and Python implementations are not complete and contain now all and only the features used in ALMA.

--------------------------------------------------

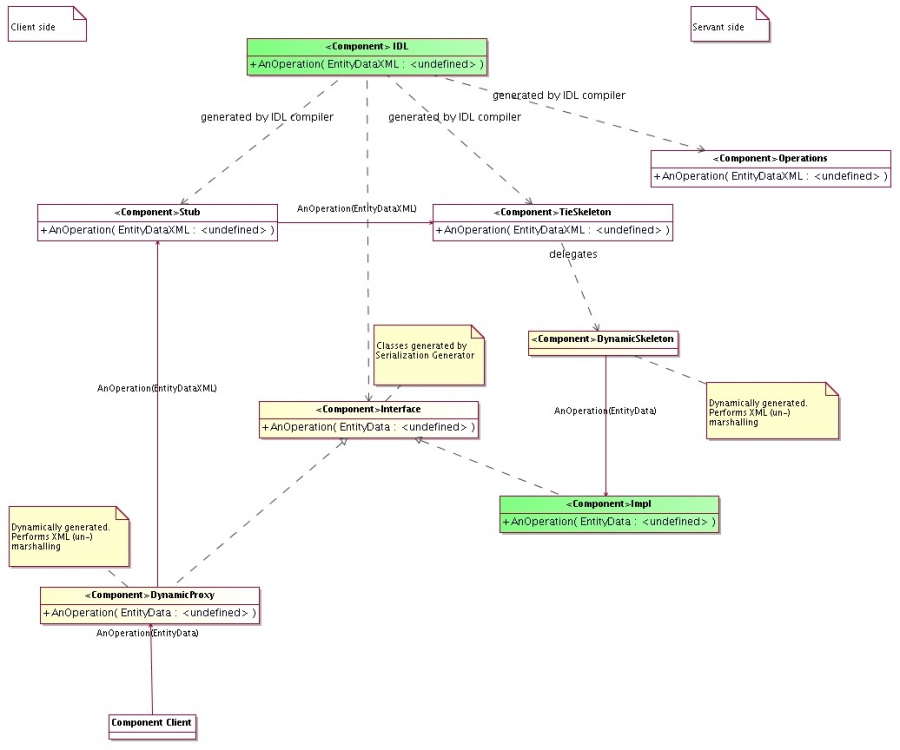

3.4.23 ACS Java Components provide explicit support for the transparent serialization of instances of entity classes

[RD01 - 3.3.2. Serialization]

. See the section on "Serialization".

3.5Configuration Database

3.5.1The configuration parameters for all Components and, in particular, for all

Characteristic Components, i.e. the initial values for Properties values and all

Characteristics for Properties, are persistently stored in the Configuration Database

[RD01 - 4.2.1. Configuration Database]

. Any application can make use of the Configuration Database to get access to configuration information.

3.5.2 There are 4 different issues related to the problems addressed by the CDB:

- input of data by the user

System configurators define the structure of the system and enter the configuration data. Easy and intuitive data entry methods are needed.

- storage of the data

The configuration data is kept into a database.

- maintenance and management of the data (e.g. versioning) Configuration data changes because the system structure and/or the implementation of the system's components changes with time and has to be maintained under configuration control.

- loading data into the ACS Containers

At run-time, the data has to be retrieved and used to initialize and configure the Components.

The main objective of the CDB Architecture is to keep these 4 issues as decoupled as possible so that:

- We can develop them separately, in space and time, according to the project priorities and the availability of resources.

- We can eventually use different technologies

- We can, in particular, support multiple data storage mechanisms (like ALMA Archive, RDBMS and XML files) in different environments (development, testing, final running system).

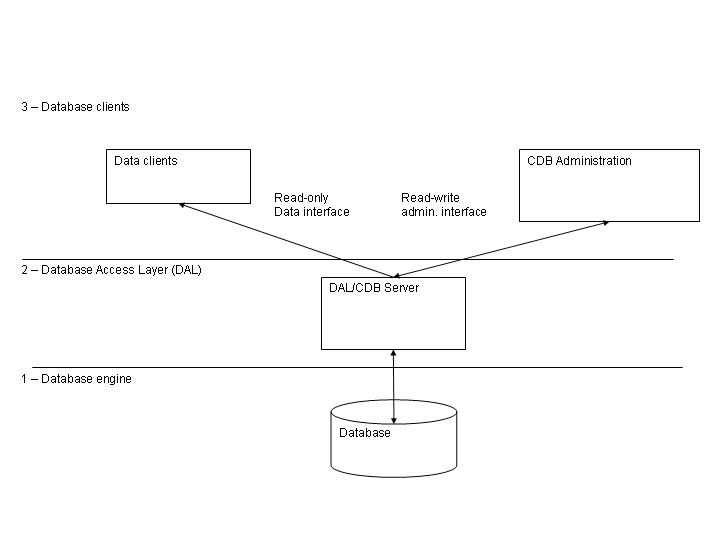

3.5.3The high-level architecture is based on three layers:

- The Database Itself

It is the database engine we use to store and retrieve data.

- The Database Access Layer (DAL) is used to hide the actual database implementation from applications, so that it is possible to use the same interfaces to access different database engines, as described in the requirements and discussed in the following sections.

- The Database Clients, store and retrieve data from the database using only the interfaces provided by the DAL.

Data Clients, like containers, Managers and Components retrieve their configuration information from the Database and are involved in issue 4. On the other hand, CDB Administration applications are used to configure, maintain and load data in the database using again interfaces provided by the DAL layer. They are involved in issue 1 and 3 and will be possibly using DAL interfaces different from the ones used by the Data Clients, as will be discussed later on.

Notice that Data Clients need only data retrieving and not data change functions.

Figure 3.4: Configuration Database architectural layers

3.5.4A Database Loader application is used to manipulate database description files and load them into the Database using the DAL administrator interfaces. Database Description Files are XML files and XML Schemas are used to define classes of database entities, allowing to use inheritance and to define default values. The Database Loader also takes care of validating the data, to make sure that only valid and consistent data is actually loaded into the database. For example, the following inconsistencies should be spotted and reported:

- missing property or characteristic

- undefined values, default will be used

- defined values that are not used elsewhere

3.5.5 When a Characteristic Component is instantiated, it configures itself according to the configuration stored in the Configuration Database

[RD01 - 3.3.2. Serialization]

.

3.5.6The implementation of the Configuration Database is hidden in a Configuration Database Access API to allow switching among alternative implementations

[RD01 - 4.2.2 Database Design]

.

3.5.7The reference implementation is based on XML files and a Java application loads and parses the XML files. Files are validated at run time and a separate CDB checker can be used to validate offline CDB XML files without the need of loading them in a "live" database.

3.5.8In ALMA, configuration information is stored in the Telescope and Monitoring Configuration Data Base (TMCDB). This is based on the Oracle RDBMS (with the small-footprint RDBMS, hsqldb, used for small-scale testing). Archive, Control and ACS subsystems are responsible for the implementation of the Configuration Database Access API on top of the TMCDB, throught the implementation of a specific Component. This implementation includes roundtrip tools to convert CDB instances between the XML reference implementation and the TMCDB. In this way it is possible to develop locally with the XML implementation and transparently migrate to the final system based on the ALMA Archive and TMCBD. (Being enhanced).

3.5.9All Components have access to the Configuration Database during construction/initialization and later during their operational life. While accessing the CDB is optional for normal components, Characteristic Components must retrieve their configuration information from there.

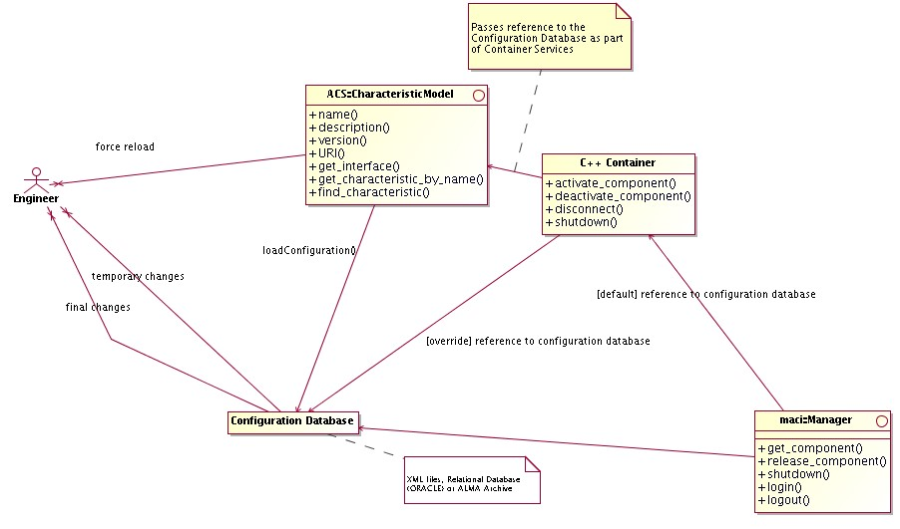

At a higher level, the Container responsible for the Components (see Container package) provides an interface to set the reference to the configuration database used. In this way it is also easy to switch between different databases at startup time.

- In order to allow switching between different instances of Configuration Database, the reference to the DAL used by each Component is provided as a common service by the Container inside which the Component lives.

- Whenever a Container is started, it gets in touch with a specified DAL instance.

- By default, if nothing else is specified, this is the DAL for Central Configuration Database and is obtained by requesting the reference to the Manager.

- Otherwise, the container can be instructed to use explicitly another DAL instance, apt to work with a Configuration Database Engine of one of the supported types.

Figure 3.5: Configuration Database

3.5.9.1We define Characteristics as statically defined in the Configuration Database. This means that is possible to change their value only by changing the configuration database and reloading just the corresponding Characteristic Components

[RD01 - 14.1.9 Dynamic configuration]

. This means that they cannot be used for, e.g., calibrations that change at run time. With this definition, calibration values that change at run time should be implemented as Properties, not as Characteristics. "Static" calibration values, for example measured by an engineer and not supposed to change for months can be Characteristics. Characteristics can change with time or can change with the context.

3.5.9.2Note: It is possible to implement also a more dynamic mechanism, but we have not identified a requirement for ALMA. This can be done transparently at a later time extending the Property class with methods to change the value of characteristics but this has not been considered in the first design to avoid increasing the complexity of the system. (Implementation not foreseen for ALMA)

3.5.10 Note: A Visual Configuration Tool (CT) can be implemented on top of the Database Loader. This can be completely independent of all other aspects of CDB and therefore can be implemented at a later stage. ALMA configuration data is edited using the TMCDB tools and therefore no requirement for such Visual Configuration Tool has been recognized. The basic features are described hereafter for a possible future implementation (Implementation not foreseen for ALMA) • The CT allows to visually edit the structure/schema of the configuration database and to fill in the values inside the instantiated database.

- The CT supports expressions and variables.

- Allows switching between different views (think of Eclipse): source, members, hierarchy, editions, and visual composition. Like in Eclipse, there should be wizards that help create new structures, but the structures can be created also manually. Structures can be changed using connections and drag&drop.

- An existing CDB can be parsed and displayed visually, for easier re-engineering.

- There is a tree view in CT that uses colour codes and icons to define classes or properties that are abstract, inherited, calculated (from expressions) and imported from templates.

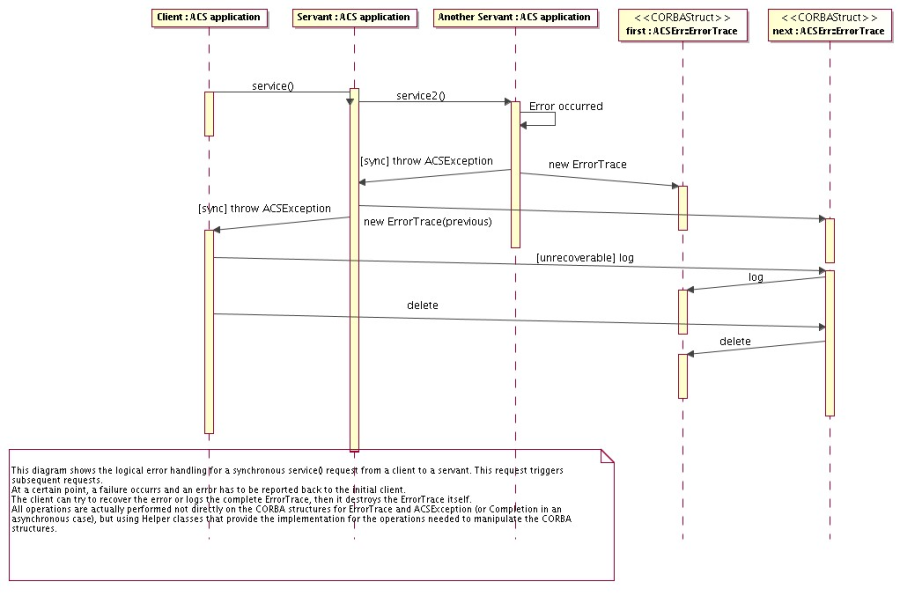

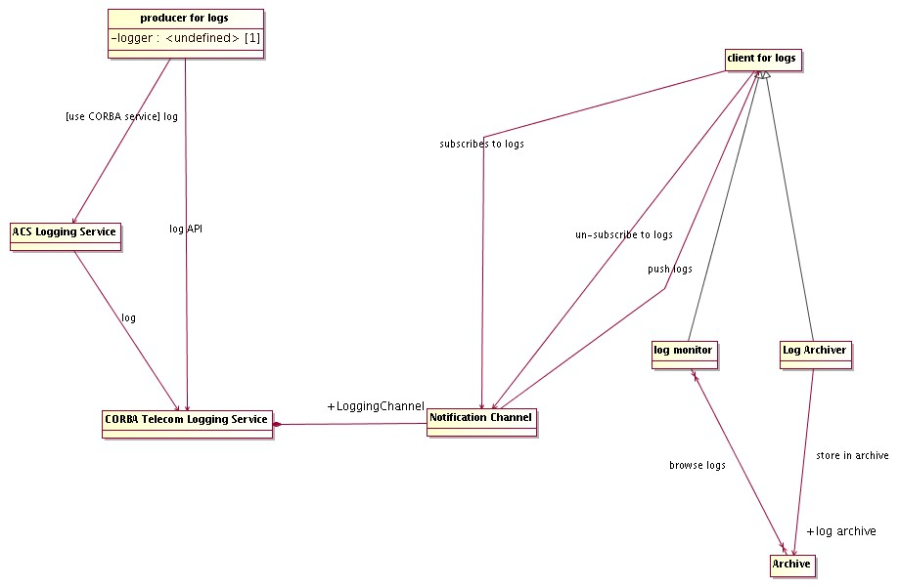

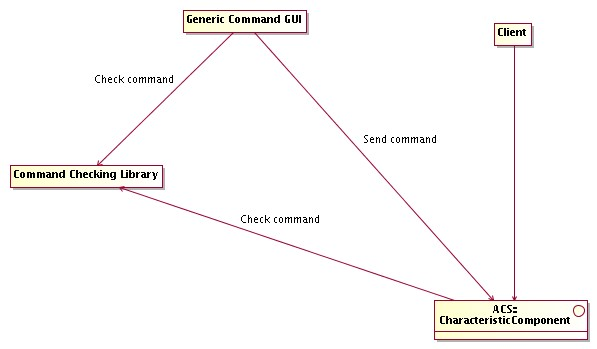

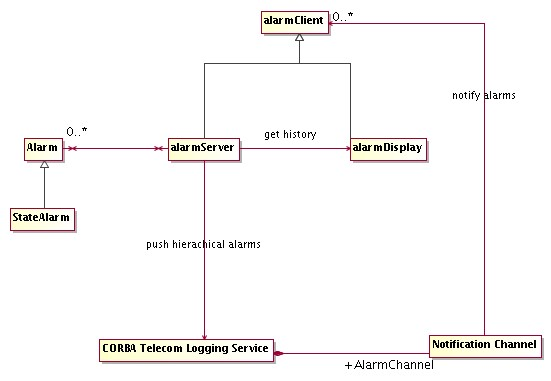

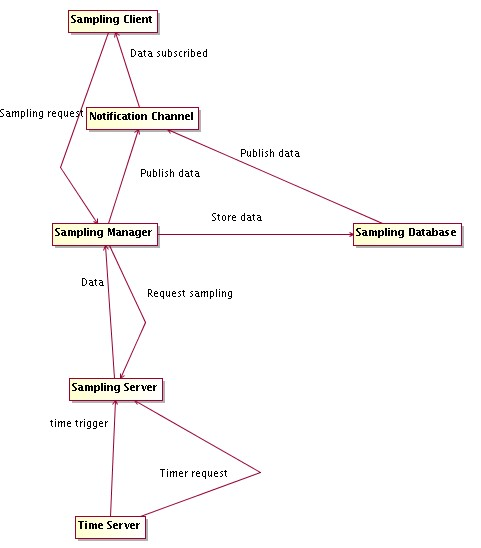

- A "spreadsheet view" is used for mass population of configuration data. The normal approach is to define the structure with the tools just described, and then to write a substitution file, which contains data in compact form in a table that is similar to a spreadsheet. Ideally, it should be straightforward to create such a substitution file in a spreadsheet program and use it from there directly. • The development of CT will be based on existing tools. For example it could be an Eclipse plugin.